HiveNet harnesses the formidable capabilities of RKE2 and K3s, two advanced Kubernetes distributions, to create an unrivaled distributed system.

At Veritas Automata, we don't just believe in automation; we embody it, delivering transformative solutions across industries.

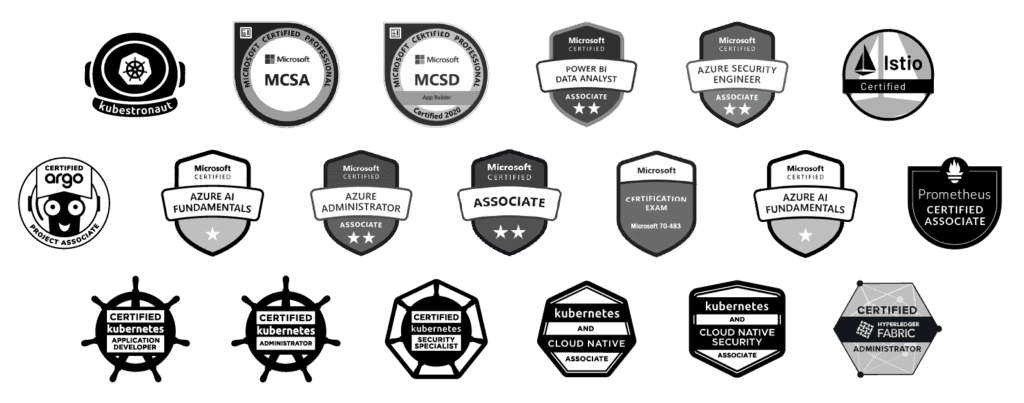

The core of HiveNet’s architectural superiority lies in the integration of RKE2 and K3s. Both are binary programs based on BusyBox, which keeps the Kubernetes runtime lightweight and secure. RKE2, short for Rancher Kubernetes Engine 2, is not just any enterprise Kubernetes solution; it’s built for the most compliance-sensitive environments. K3s is the lightweight Kubernetes distribution, optimized for edge computing with a focus on efficiency and simplicity. The fusion of RKE2 and K3s in HiveNet is not just a feature; it’s a statement of our capability to excel in various environments, from cloud infrastructures to the most challenging edge computing scenarios.

Integration and Interoperability

We leverage Kubernetes not as a tool, but as a foundation, enabling HiveNet to integrate effortlessly with the entire Veritas Automata ecosystem. More than just supporting IoT and Smart Products, HiveNet is redefining how these technologies interact and transform business processes. With HiveNet, we’re not just managing data flow or workflows; we’re orchestrating the future of cohesive technology integration. The HiveNet Core lives in the cloud and constitutes the distributed system memory, acting as the “main brain,” while the HiveNet nodes live on the edge of the HiveNet network.

Deployment Flexibility

HiveNet breaks the mold of traditional computing environments. Our platform is not confined to mere cloud or static environments; it’s built for agility and can be deployed anywhere from the cloud to laptops and, soon, mobile devices. This isn’t just flexibility; it’s a revolution in deployment, adaptable for businesses of any scale, from startups to global enterprises. A private network encompasses all HiveNet deployments. In the future, HiveNet’s distributed extensions are intended to run as an offline private model, bridged only by a secured gateway.

Blockchain Integration and Smart Contract Management

At the heart of HiveNet’s functionality is the integration of Hyperledger Fabric. This is not just about managing transactions; it’s about reimagining chain-of-custody and transactional workflows on a global scale. In sectors where data integrity, traceability, and security are non-negotiable, HiveNet emerges as the ultimate solution.
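To make the chain-of-custody idea concrete, here is a minimal Python sketch of a tamper-evident custody log in which each event stores the hash of the event before it. It is an illustration of the general hash-linking principle only; it does not use the Hyperledger Fabric API and is not HiveNet’s implementation. The names `CustodyEvent`, `append_event`, and `verify_chain`, along with the example item and holder values, are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

# Placeholder "previous hash" for the first event in a log (an assumption
# of this sketch, not a Fabric convention).
GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class CustodyEvent:
    item_id: str    # what is being tracked (hypothetical identifier)
    holder: str     # who holds the item after this event
    action: str     # what happened, e.g. "collected" or "shipped"
    prev_hash: str  # digest of the preceding event
    digest: str     # SHA-256 over this event's fields plus prev_hash


def _digest(item_id: str, holder: str, action: str, prev_hash: str) -> str:
    """Hash the event fields in a stable (sorted-key) JSON encoding."""
    payload = json.dumps(
        {"item_id": item_id, "holder": holder,
         "action": action, "prev_hash": prev_hash},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(chain, item_id, holder, action):
    """Return a new list with one more event linked to the current tail."""
    prev_hash = chain[-1].digest if chain else GENESIS_HASH
    event = CustodyEvent(item_id, holder, action, prev_hash,
                         _digest(item_id, holder, action, prev_hash))
    return chain + [event]


def verify_chain(chain) -> bool:
    """Recompute every digest and check each link to its predecessor."""
    prev_hash = GENESIS_HASH
    for event in chain:
        expected = _digest(event.item_id, event.holder,
                           event.action, event.prev_hash)
        if event.prev_hash != prev_hash or event.digest != expected:
            return False
        prev_hash = event.digest
    return True
```

Because every digest covers the previous one, changing any earlier event invalidates verification for the log. A permissioned ledger adds what this sketch lacks: replication across organizations, endorsement, and consensus on which events are accepted.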

Observability and AI/ML Integration

Observability is a core feature of HiveNet and is entirely confidential when operating behind the VPN, serving as a key factor for ensuring data isolation. We use tools like Thanos, Prometheus, and Grafana not only to monitor, but also to surface insights into system performance. The integration of AI/ML capabilities, particularly in edge and cloud scenarios, is a leap toward predictive analytics, optimization, and real-time decision support.
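As a rough illustration of how monitoring data like this can be consumed programmatically, the Python sketch below builds a Prometheus instant-query URL and extracts the targets reporting `up == 0` from a response body. It relies only on the standard Prometheus HTTP API (`/api/v1/query`) and the built-in `up` metric; the host name, the helper names `build_instant_query_url` and `down_instances`, and the sample payload are assumptions for illustration, not part of HiveNet.

```python
import json
from urllib.parse import urlencode


def build_instant_query_url(base_url: str, promql: str) -> str:
    """Build the URL for a Prometheus instant query (GET /api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def down_instances(response_body: str) -> list[str]:
    """Return the sorted `instance` labels whose sample value is 0.

    Expects the JSON body of an instant query for the `up` metric, in which
    each result carries a `metric` label set and a `[timestamp, "value"]` pair.
    """
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    down = []
    for series in payload["data"]["result"]:
        _timestamp, value = series["value"]  # the value arrives as a string
        if float(value) == 0.0:
            down.append(series["metric"].get("instance", "<unknown>"))
    return sorted(down)


# Hypothetical endpoint, reachable only from inside the private network.
url = build_instant_query_url("http://prometheus.example.internal:9090", "up")

# Hand-written sample response in the documented instant-vector shape.
sample = json.dumps({
    "status": "success",
    "data": {"resultType": "vector", "result": [
        {"metric": {"instance": "edge-node-1:9100"}, "value": [1700000000, "1"]},
        {"metric": {"instance": "edge-node-2:9100"}, "value": [1700000000, "0"]},
    ]},
})
print(down_instances(sample))
```

The sketch deliberately stops short of making the HTTP request, so it can be run without a live Prometheus server; in practice the URL would be fetched with an HTTP client from inside the VPN.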

Business Use Case: HiveNet Deployment for Clinical Trial Management in Life Sciences

In the life sciences sector, managing clinical trials presents a myriad of challenges, including data integrity, participant privacy, regulatory compliance, and the efficient coordination of disparate data sources. The complexity of clinical trials has increased, with growing amounts of data and more stringent regulatory requirements. The need for a secure, scalable, and flexible system to manage this data has never been more critical. Traditional systems often struggle with these demands, leading to inefficiencies and increased costs.

HiveNet addresses the unique needs of the life sciences vertical by providing a distributed system designed for high compliance and efficiency. Key benefits include:

- Robust Data Security and Compliance

- Efficient Data Management at Scale

- Seamless Integration with Existing Systems

- Flexible Deployment Options

- Blockchain for Data Integrity and Traceability

- Advanced Observability and AI/ML Insights

Business Impact

For a biotechnology company conducting a global clinical trial for a new therapeutic drug, deploying HiveNet resulted in:

- Enhanced Data Security: Meeting stringent regulatory requirements for data protection and patient privacy, thereby reducing compliance risks.

- Improved Efficiency: Streamlining the collection, analysis, and reporting of trial data, thus accelerating time to insights and decision-making.

- Increased Scalability: Efficiently managing increased data volumes and participant numbers without compromising system performance.

- Data Integrity and Trust: Ensuring the integrity of trial data across multiple sites, which is crucial for regulatory approval and market trust.

HiveNet, with its strategic use of RKE2 and K3s within the Kubernetes framework, is not just a testament to Veritas Automata’s expertise in distributed systems; it also reflects our steadfast commitment to efficiency, security, and scalability.

We don’t just create products; we are setting the course for the future of business technology, echoing our unwavering vision of “Trust in Automation.”
