Welcome to the intricate dance of Kubernetes, where readiness and liveness probes keep your microservices in step. This guide is written for developers at all levels, from seasoned Kubernetes practitioners to those just beginning to explore it.

Here, we cover the best practices, practical examples, and real-world applications of probes that make your microservices architectures robust, reliable, and fault-tolerant.

Kubernetes, at its core, is a system designed for running and managing containerized applications across a cluster. The heart of this system lies in its ability to ensure that applications are not just running, but also ready to serve requests and healthy throughout their lifecycle. This is where readiness and liveness probes come into play, acting as vital indicators of the health and state of your applications.

Readiness probes determine if a container is ready to start accepting traffic. When a readiness probe fails, Kubernetes stops routing Service traffic to that pod but does not restart the container. This matters most during startup, when an application may be running but not yet able to process requests. With a readiness probe in place, the container only begins handling requests once it is fully prepared, and it is taken out of rotation again if it later becomes unready.

Liveness probes, on the other hand, help Kubernetes understand if a container is still functioning properly. If a liveness probe fails, Kubernetes knows that the container has encountered an issue and will automatically restart it. This automatic healing mechanism ensures that problems within the container are addressed promptly, maintaining the overall health and efficiency of your applications.
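The difference between the two probe types can be summarized as a small decision function. This is a deliberately simplified sketch: the `Action` enum and `react` function are hypothetical names used only for illustration, and the model ignores thresholds and timing.

```python
from enum import Enum


class Action(Enum):
    NONE = "no action"
    STOP_TRAFFIC = "stop routing traffic to the pod"
    RESTART = "restart the container"


def react(probe: str, passed: bool) -> Action:
    """Return the (simplified) reaction to a single probe result."""
    if passed:
        return Action.NONE
    if probe == "readiness":
        # A failing readiness probe only affects traffic routing.
        return Action.STOP_TRAFFIC
    if probe == "liveness":
        # A failing liveness probe leads to a container restart.
        return Action.RESTART
    raise ValueError(f"unknown probe type: {probe}")
```

The key point is that a readiness failure never restarts anything, while a liveness failure does, so the two checks should not be treated as interchangeable.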

Best Practices for Implementing Probes

Designing effective readiness and liveness probes is an art that requires understanding both the nature of your application and the nuances of Kubernetes. Here are some best practices to follow:

Define Specific Endpoints

Create dedicated endpoints in your application for readiness and liveness checks. These endpoints should reflect the internal state of the application accurately.
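As a minimal sketch of dedicated endpoints, the following uses Python's standard library only. The paths `/healthz` and `/readyz`, the `STATE` dictionary, and port 8080 are assumptions chosen for illustration, not names taken from any particular application. Kubernetes treats an HTTP probe response with a status code from 200 to 399 as a success, so the handlers return 200 when healthy and a 5xx code otherwise.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical application state; a real service would update these flags
# from its own startup sequence and runtime checks.
STATE = {"alive": True, "ready": False}


def probe_response(path: str, state: dict) -> tuple[int, str]:
    """Map a probe path to an HTTP status code and a short body."""
    if path == "/healthz":   # liveness: is the process still functioning?
        return (200, "ok") if state["alive"] else (500, "unhealthy")
    if path == "/readyz":    # readiness: can it serve requests right now?
        return (200, "ready") if state["ready"] else (503, "not ready")
    return (404, "not found")


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = probe_response(self.path, STATE)
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(body.encode())


def serve(port: int = 8080) -> None:
    """Start the probe server; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), ProbeHandler).serve_forever()
```

Calling `serve()` starts the server, and the application would set `STATE["ready"] = True` once initialization completes. Keeping the routing logic in a plain function such as `probe_response` makes it easy to unit test without opening a socket.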

Avoid False Positives

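Carefully set probe thresholds, such as failureThreshold and periodSeconds, to avoid unnecessary restarts or traffic routing issues. A probe that fails while the application is actually healthy can trigger restarts or remove capacity exactly when the system is under load, which can lead to cascading failures in a microservices architecture.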
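To see how thresholds absorb transient failures, here is a small simulation. It is a sketch of the consecutive-failure and consecutive-success counting that the `failureThreshold` and `successThreshold` probe fields describe, not the kubelet's actual implementation; the function name `probe_states` and the assumption that the container starts out healthy are illustrative.

```python
def probe_states(results, failure_threshold=3, success_threshold=1):
    """Return the reported health after each raw probe result.

    results: iterable of booleans, True for a passing check.
    """
    healthy = True  # assumption: the container starts out healthy
    failures = successes = 0
    states = []
    for ok in results:
        if ok:
            successes += 1
            failures = 0
            # Recover only after enough consecutive successes.
            if not healthy and successes >= success_threshold:
                healthy = True
        else:
            failures += 1
            successes = 0
            # Flip to unhealthy only after enough consecutive failures.
            if healthy and failures >= failure_threshold:
                healthy = False
        states.append(healthy)
    return states
```

With a threshold of 3, a single failed check between two successes does not change the reported state, whereas a threshold of 1 reacts to every blip. That trade-off between detection speed and stability is what the threshold settings control.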

Use Delays and Timeouts Wisely

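Configure the initial delay (initialDelaySeconds) and timeout (timeoutSeconds) based on the measured startup time and expected response times of your services. A delay that is too short causes restarts before the application has finished starting, and a timeout that is too short turns a slow response into a failed probe.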
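A quick back-of-the-envelope calculation helps when picking these values. The two helpers below are rough estimates under simplifying assumptions (fixed intervals, no scheduling jitter); the function names are hypothetical, and the parameters mirror the `initialDelaySeconds`, `periodSeconds`, `failureThreshold`, and `timeoutSeconds` probe fields.

```python
def startup_budget(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Approximate seconds a container has before the probe is marked failed."""
    return initial_delay + period * failure_threshold


def detection_time(period: int, failure_threshold: int, timeout: int) -> int:
    """Approximate worst-case seconds from a hang to the probe being marked failed."""
    return period * failure_threshold + timeout
```

For example, with an initial delay of 10 seconds, a period of 5 seconds, and a failure threshold of 3, `startup_budget(10, 5, 3)` gives roughly 25 seconds. If the service sometimes needs longer than that to start, one of the three values has to grow.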

Monitor and Adjust

Continuously monitor the performance of your probes and adjust their configurations as your application evolves.

Mastering readiness and liveness probes in Kubernetes is like conducting a ballet. It requires precision, understanding, and a keen eye for detail. By embracing these concepts, you can ensure that your Kubernetes deployments perform gracefully, handling the ebbs and flows of traffic and operations with elegance and resilience. Whether you are a seasoned developer or new to this landscape, this guide is your key to choreographing a successful Kubernetes deployment.

In short, probes improve system stability and give Kubernetes a clear view of each service's state. A dedicated health endpoint is essential, and the timing settings deserve as much attention as the checks themselves. Configured well, probes are a valuable tool for achieving high availability.

At Veritas Automata, we use liveness probes connected to a health endpoint. This endpoint checks the state of the endpoints behind it and reports a result that Kubernetes uses to determine liveness. Additionally, the readiness probe checks the application’s state, ensuring it is connected to its dependent services before it starts accepting requests.
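A dependency-aware readiness check of this kind could be sketched as follows. This is not Veritas Automata's implementation; `check_readiness` and the check names are hypothetical, and each check is assumed to be a zero-argument callable that returns True when its dependency is reachable.

```python
from typing import Callable, Dict, Tuple


def check_readiness(
    checks: Dict[str, Callable[[], bool]],
) -> Tuple[bool, Dict[str, bool]]:
    """Run every dependency check; return overall readiness and per-check detail."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            # A check that raises is treated as a failed dependency.
            results[name] = False
    return all(results.values()), results


# Usage with placeholder checks; real ones might ping a database or a queue.
ready, detail = check_readiness({
    "database": lambda: True,
    "queue": lambda: False,
})
```

Here `ready` is False because one dependency is down, and `detail` shows which one. A readiness endpoint could return 503 in that case and include `detail` in the response body to make troubleshooting easier.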

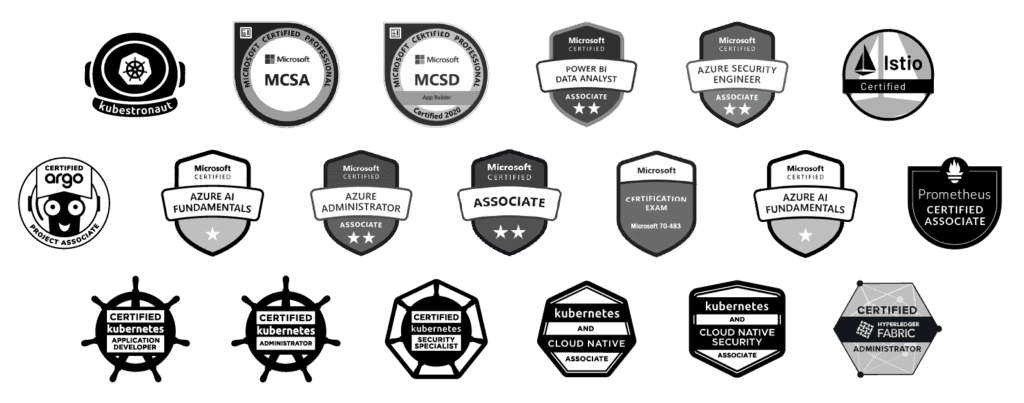

I have the honor of presenting this topic at a CNCF Kubernetes Community Day in Costa Rica. Kubernetes Day Costa Rica 2024, also known as Kubernetes Community Day (KCD) Costa Rica, is a community-driven event focused on Kubernetes and cloud-native technologies. This event brings together enthusiasts, developers, students, and experts to share knowledge, experiences, and best practices related to Kubernetes, its ecosystem, and its evolving technology.