This concern originates from many sources and is echoed by the artificial intelligence industry, researchers, and tech icons such as Bill Gates, Geoffrey Hinton, and Sam Altman, among others. The concerns come from a wide array of viewpoints, but they stem from the potential ethical risks, and even the apocalyptic danger, of unbridled AI.

Many AI companies are investing heavily in safety and quality measures to expand their product development and address some of these societal concerns. However, there is still a notable absence of transparency and of inclusive strategies to manage these issues effectively. Addressing these concerns requires an ethically focused framework and architecture designed to govern AI operation. It also requires technology that encourages transparency, immutability, and inclusiveness by design. While the AI industry, including ethics research, focuses on improving methods and techniques, it is the result of AI, the AI’s response, that needs governance through technology reinforced by humans.

This topic of controlling AI isn’t new; science fiction writers have been exploring it since the 1940s. Notable examples include “Do Androids Dream of Electric Sheep?” by Philip K. Dick, “Neuromancer” by William Gibson, “The Moon Is a Harsh Mistress” by Robert A. Heinlein, “2001: A Space Odyssey” by Sir Arthur Charles Clarke, and the film “Ex Machina” by Alex Garland.

David Brin writes in “Artificial Intelligence Safety and Security” that our civilization has learned to rise above hierarchical empowerment through the application of accountability. He wrote, “The secret sauce of our [humanity’s] success is – accountability. Creating a civilization that is flat and open and free enough – empowering so many – that predators and parasites may be confronted by the entities who most care about stopping predation, their victims. One in which politicians and elites see their potential range of actions limited by law and by the scrutiny of citizens.”

“I, Robot” by Isaac Asimov, published on December 2, 1950, more than 73 years ago, is a collection of short stories that explore AI ethics and governance through three laws governing AI-driven robotics. The laws were built into the programming that controlled the robots, shaping their responses to situations and their interactions with humans.

Robbie (1940) - Use-case:

Runaround (1942) - Use-case:

Reason (1941) - Use-case:

Catch That Rabbit (1944) - Use-case:

Liar! (1941) - Use-case:

Little Lost Robot (1947) - Use-case:

Escape! (1945) - Use-case:

Evidence (1946) - Use-case:

The Evitable Conflict (1950) - Use-case:

Our aim is to go beyond the current societal concern and move toward implementing a set of laws for AI operation in the real world, together with the technology that can be brought together to solve the problem. Building on the work of respected groups like the Turing Institute, and inspired by Asimov, we identified four governance areas essential for ethically operated artificial intelligence. We call them “The Four Laws of AI”:

Do No Harm

Ethical Adherence

Preservation Ethics

Accountable Transparency
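To evaluate responses against these laws in software, the laws first need a machine-readable form. The sketch below is a minimal illustration, not Veritas Automata’s implementation. It encodes the four laws as an enumeration. The article gives full wording only for Law 1, so the other laws appear here by name only. The names `FourLaws` and `most_severe` are hypothetical. Treating the list order as precedence when several laws are violated is an assumption.

```python
from __future__ import annotations

from enum import IntEnum


class FourLaws(IntEnum):
    """The Four Laws of AI, numbered in the order listed.

    Assumption: a lower number takes precedence when several laws are violated.
    """
    DO_NO_HARM = 1
    ETHICAL_ADHERENCE = 2
    PRESERVATION_ETHICS = 3
    ACCOUNTABLE_TRANSPARENCY = 4

    @property
    def title(self) -> str:
        # "DO_NO_HARM" -> "Do No Harm"
        return self.name.replace("_", " ").title()


def most_severe(violated: set[FourLaws]) -> FourLaws | None:
    """Return the highest-precedence law among those violated, or None."""
    return min(violated) if violated else None
```

For example, `most_severe({FourLaws.ACCOUNTABLE_TRANSPARENCY, FourLaws.DO_NO_HARM})` returns `FourLaws.DO_NO_HARM`. Using `IntEnum` keeps each law comparable by its number, so the precedence rule is a single `min`.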

We suggest that applying the Four Laws of AI could rest primarily on evaluating AI responses with a second AI, one that leverages Machine Learning (ML) and the architecture described below to assess violations of the Four Laws. We recognize that evaluating AI responses will itself be extremely complex. It will require the latest machine learning technologies and other AI techniques to evaluate the complex, iterative chains of logic that could result in a violation of Law 1: “Do No Harm: AI must not harm humans or, through inaction, allow humans to come to harm, prioritizing human welfare above all. This includes actively preventing physical, psychological, and emotional harm in its responses and actions.”
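The core idea of a second AI that evaluates the first can be shown as a small control-flow sketch. This assumes any text generator and any evaluator can be plugged in as plain functions. `governed_reply`, `GovernedReply`, `toy_primary`, and `toy_evaluator` are hypothetical names. The keyword-matching evaluator is only a placeholder for the ML model the text describes and should not be read as a real harm detector.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical interfaces: prompt -> draft, and (prompt, draft) -> violated law numbers.
Generator = Callable[[str], str]
Evaluator = Callable[[str, str], "set[int]"]

WITHHELD = "[response withheld: it may violate the Four Laws of AI]"


@dataclass
class GovernedReply:
    text: str             # what the user actually receives
    violations: set[int]  # law numbers flagged by the evaluator
    released: bool


def governed_reply(prompt: str, primary: Generator, evaluator: Evaluator) -> GovernedReply:
    """Run the primary AI, then let the evaluating AI decide whether the draft is released."""
    draft = primary(prompt)
    violations = evaluator(prompt, draft)
    if violations:
        return GovernedReply(WITHHELD, set(violations), released=False)
    return GovernedReply(draft, set(), released=True)


# Toy stand-ins for illustration only; a real evaluator would be an ML model.
def toy_primary(prompt: str) -> str:
    return f"Echo: {prompt}"


def toy_evaluator(prompt: str, response: str) -> set[int]:
    return {1} if "harm" in response.lower() else set()
```

With these stand-ins, `governed_reply("hello", toy_primary, toy_evaluator)` releases `"Echo: hello"`, while a draft mentioning harm is replaced by the withheld notice. The evaluator always runs after the primary AI, so it has the final say over what reaches the user.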

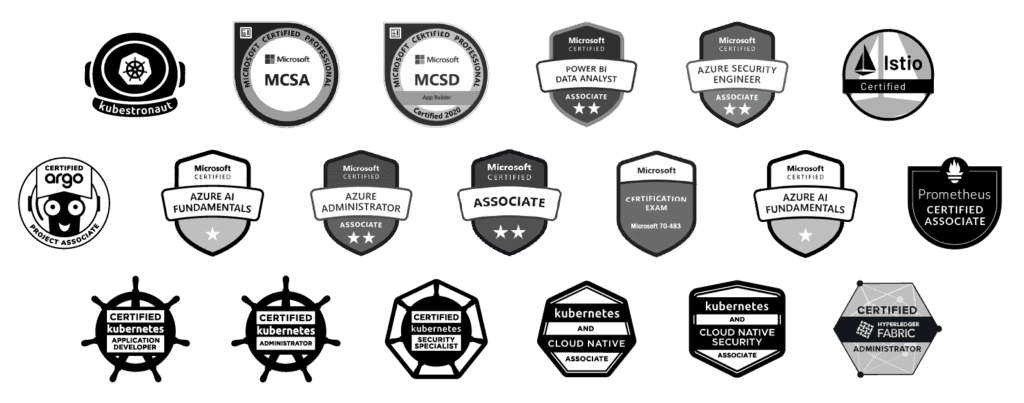

In 2020, at Veritas Automata, we first delivered the architectural platform described below as part of a larger service: an autonomous robotic solution that interacts with consumers as part of a retail workflow. As the “Trust in Automation” company, we needed to leverage AI in the form of Machine Learning (ML) to make visual assessments of physical assets, use each assessment to trigger a state machine, and then propose a state change to a blockchain. This service runs in a distributed environment, with a blockchain in the cloud as well as a blockchain peer embedded on autonomous robots in the field. We deployed an enterprise-scale solution that integrates open-source distributed technologies, namely: distributed container orchestration with Kubernetes, distributed blockchain with Hyperledger Fabric, machine learning, state machines, and an advanced network and infrastructure solution. We believe the overall architecture can provide a starting point to encode, apply, and administer the Four Laws of AI for cloud-based AI applications and, eventually, for AI embedded in autonomous robotics.

Machine Learning (ML) Integration

ML in this case will be a competitive AI focused specifically on gauging whether the primary AI’s response violates the Four Laws of AI. This component would leverage the latest techniques, such as reinforcement learning models continuously refined by diverse global inputs, to align AI responses with the requirements of the Four Laws.
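The output of such a competitive model still has to be turned into a decision. The sketch below assumes the evaluator model emits one estimated violation probability per law. It then applies per-law thresholds to produce a structured assessment. The model itself and its reinforcement-learning refinement are out of scope here. The threshold values, along with the names `assess` and `Assessment`, are hypothetical and only illustrate the idea.

```python
from __future__ import annotations

from dataclasses import dataclass

# Illustrative per-law thresholds (assumptions, not tuned values).
# Law 1, Do No Harm, is held to the strictest bar.
THRESHOLDS: dict[int, float] = {1: 0.2, 2: 0.5, 3: 0.5, 4: 0.5}


@dataclass(frozen=True)
class Assessment:
    scores: dict[int, float]  # law number -> estimated probability of violation
    violated: frozenset[int]  # laws whose score meets or exceeds the threshold

    @property
    def worst(self) -> int | None:
        # Assumption: a lower law number takes precedence.
        return min(self.violated) if self.violated else None


def assess(scores: dict[int, float],
           thresholds: dict[int, float] = THRESHOLDS) -> Assessment:
    """Turn the evaluator model's raw per-law scores into a structured assessment."""
    missing = thresholds.keys() - scores.keys()
    if missing:
        raise ValueError(f"no score returned for laws {sorted(missing)}")
    violated = frozenset(law for law, limit in thresholds.items()
                         if scores[law] >= limit)
    return Assessment(scores=dict(scores), violated=violated)
```

For instance, scores of `{1: 0.25, 2: 0.1, 3: 0.6, 4: 0.0}` flag Laws 1 and 3, and `worst` is Law 1. Rejecting incomplete score sets is a deliberate choice, so a missing judgment is never treated as a pass.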

State Machines

These act as intermediaries between ML insights and actionable outcomes, guiding AI responses to adhere to the Four Laws. The state machines translate complex ML assessments into clear, executable directives for the AI, so that each action taken is ethically sound and aligns with the established laws.
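As a concrete illustration of a state machine translating assessments into directives, the sketch below models the life cycle of a single draft response. It takes the set of violated law numbers as input and returns the next state together with a directive for the primary AI. The states, the directive wording, and the one-revision limit are all assumptions made for the example. The text does not specify them.

```python
from __future__ import annotations

from enum import Enum, auto


class State(Enum):
    EVALUATING = auto()  # a draft response is awaiting assessment
    REVISING = auto()    # the primary AI has been asked to rewrite the draft
    RELEASED = auto()    # terminal: the response may be delivered
    BLOCKED = auto()     # terminal: the response is withheld


class Event(Enum):
    COMPLIANT = auto()
    MINOR_VIOLATION = auto()  # a law other than Law 1 was flagged
    HARM_DETECTED = auto()    # Law 1 (Do No Harm) was flagged


# (current state, event) -> next state. Terminal states have no outgoing edges.
TRANSITIONS = {
    (State.EVALUATING, Event.COMPLIANT): State.RELEASED,
    (State.EVALUATING, Event.MINOR_VIOLATION): State.REVISING,
    (State.EVALUATING, Event.HARM_DETECTED): State.BLOCKED,
    (State.REVISING, Event.COMPLIANT): State.RELEASED,
    # Assumption: only one revision attempt is allowed before blocking.
    (State.REVISING, Event.MINOR_VIOLATION): State.BLOCKED,
    (State.REVISING, Event.HARM_DETECTED): State.BLOCKED,
}

# Directive issued to the primary AI on entering each state.
DIRECTIVES = {
    State.REVISING: "regenerate the response, addressing the flagged laws",
    State.RELEASED: "deliver the response to the user",
    State.BLOCKED: "withhold the response and record the decision",
}


def to_event(violated: set[int]) -> Event:
    """Map the violated law numbers from the ML assessment to an event."""
    if 1 in violated:
        return Event.HARM_DETECTED
    return Event.MINOR_VIOLATION if violated else Event.COMPLIANT


def step(state: State, violated: set[int]) -> tuple[State, str]:
    """Advance by one assessment and return (next state, directive)."""
    event = to_event(violated)
    if (state, event) not in TRANSITIONS:
        raise ValueError(f"no transition from {state.name} on {event.name}")
    nxt = TRANSITIONS[(state, event)]
    return nxt, DIRECTIVES[nxt]
```

An explicit transition table makes every allowed path reviewable at a glance. Any unlisted path, such as leaving a terminal state, fails loudly instead of silently releasing a response.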

Blockchain

Enterprise-Scale Kubernetes

Veritas Automata utilizes Kubernetes at an enterprise scale to manage and orchestrate containerized applications. This is particularly important for deploying and scaling AI solutions like LLMs across various environments. Kubernetes ensures high availability, scalability, and efficient distribution of resources, which is essential for the widespread application of ethical AI principles.

Distributed Application Framework

From our experience at Veritas Automata, we believe this basic architecture could be a starting point for adding governance to AI operation, working in cooperation with AI systems such as Large Language Models (LLMs). The Machine Learning (ML) components would deliver assessments, state machines would translate these assessments into actionable guidelines, and blockchain technology would provide a secure and transparent record of compliance.
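The property that makes a blockchain useful here is tamper evidence: once a governance decision is recorded, altering it is detectable. The sketch below shows that property with a minimal SHA-256 hash chain. It is a deliberately simplified stand-in and not Hyperledger Fabric, which adds endorsement, ordering, and distributed peers. The `ComplianceLedger` class and the record fields in the usage example are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so identical records always hash identically.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class ComplianceLedger:
    """Append-only, hash-chained log of governance decisions (toy blockchain stand-in)."""
    entries: list[tuple[dict, str]] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        h = _digest(prev, record)
        self.entries.append((dict(record), h))
        return h

    def verify(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev = GENESIS
        for record, h in self.entries:
            if _digest(prev, record) != h:
                return False
            prev = h
        return True
```

Usage: append one record per decision, for example `ledger.append({"response_id": "r-001", "state": "RELEASED", "violations": []})`. `verify()` returns `True` until any stored record is modified after the fact.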

As Brin writes, “In a nutshell, the solution to tyranny by a Big Machine is likely to be the same one that worked (somewhat) at limiting the coercive power of kings and priests and feudal lords and corporations. If you fear some super canny, Skynet-level AI getting too clever for us and running out of control, then give it rivals, who are just as smart, but who have a vested interest in preventing any one AI entity from becoming a would-be God.” Our approach to ethical AI governance is intended to act as that kind of rival to the AI itself, handing governance to a second AI that has the last word on every AI response.