The symphony is not just a fusion of cloud and edge technologies; it's a harmonious blend of trust, clarity, efficiency, and precision.

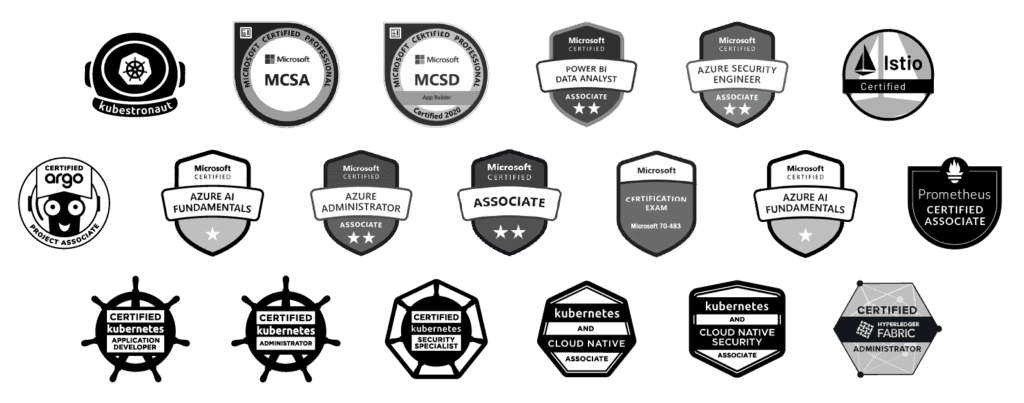

HiveNet's Foundation: Powering Innovation with Open Source

At the heart of HiveNet lies a foundation built on the open-source K3s and RKE2 Kubernetes distributions: a pre-integrated cloud (AWS) and bare-metal edge cluster that share the same control plane. This architecture is the cornerstone of the HiveNet Application Ecosystem, giving every HiveNet application the same flexibility and scalability whether it runs in the cloud or at the edge.

Orchestrating the Cloud and Edge Dance

HiveNet for Blockchain – a jewel in HiveNet’s crown – simplifies the distribution of processes across roles, organizations, corporations, and governments.

This solution can manage chain-of-custody at a global level, integrating with other HiveNet tools to support IoT and Smart Products within broader workflows. Imagine a symphony where each note is perfectly timed and in sync – that’s HiveNet for Blockchain, which uses the same Kubernetes framework as all HiveNet products. The shared framework keeps solutions standardized and makes it efficient to deploy the platform core to the cloud, while the extended edge nodes run on embedded devices, edge computers, laptops, and soon mobile devices for enhanced workflow integration.

Hyperledger Fabric: Crafting Trust and Transparency

In the orchestra of blockchain technologies, HiveNet employs Hyperledger Fabric to meet data access, regulatory, workflow, and organizational needs. Fabric provides secure, transparent transaction management and establishes a foundation for chain-of-custody applications.
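To make the chain-of-custody idea concrete, here is a minimal sketch of a hash-linked custody log in plain Python. It is not the Hyperledger Fabric API and not HiveNet's implementation; the function names (`record_hash`, `append_event`, `verify`) and the record fields are hypothetical, chosen only to show why a tampered record becomes detectable once each entry commits to the one before it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(chain: list, custodian: str, action: str) -> list:
    """Return a new chain with a custody event linked to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    body = {"custodian": custodian, "action": action, "prev_hash": prev_hash}
    entry = dict(body, hash=record_hash(body))
    return chain + [entry]


def verify(chain: list) -> bool:
    """Check that every entry's hash and back-link are intact."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or record_hash(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical custody trail for a single product
chain = append_event([], "factory", "assembled")
chain = append_event(chain, "carrier", "shipped")
```

Calling `verify(chain)` on the untouched log succeeds, while editing any earlier field (for example, changing the first custodian) breaks the stored hash and the check fails. A permissioned ledger such as Fabric adds what this sketch leaves out: multiple organizations endorsing and ordering each transaction rather than a single party maintaining the list.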

Use Case: Smart Product Manufacturing

Let’s step into the real-world impact of HiveNet’s orchestration prowess with an example of smart product manufacturing:

Picture a market category leader in the manufacture and operation of smart products for retail and food.

The challenge was clear: give operators the ability to deliver successful interactions with consumers and delivery partners while producing valuable, auditable transactional data.

Veritas Automata took on this challenge by developing an autonomous transaction solution, starting in 2018.

Leveraging Hyperledger Fabric blockchain technology on Kubernetes with Robot Operating System (ROS) for hardware control and ML-powered vision, the HiveNet-powered robotic solution became a reality by 2020. Cloud services integrated seamlessly with bare-metal edge computing devices, creating a multi-tenant cloud-based K3s Kubernetes cluster.

The outcome? Over 20 IoT components in each system, autonomous interactions between the robotic system and consumers, synchronized workflow control, and separate cloud API integrations for each operator. Veritas Automata’s HiveNet solution delivered unparalleled capabilities, supporting the manufacturer’s business requirements and maintaining market category leadership.

Why HiveNet? Elevating Automation to a Symphony

In the grand symphony of automation and orchestration, HiveNet stands tall as a testament to Veritas Automata’s commitment to innovation, trust, and precision. The orchestration techniques showcased in smart product manufacturing exemplify the company’s ability to solve complex challenges logically and intuitively.

For ambitious leaders and executives seeking automation solutions in industries like Life Sciences, Manufacturing, Supply Chain, and Transportation, let the symphony of HiveNet elevate your organization to new heights.