The ability to extend and enhance platforms is the key to staying ahead.

Veritas Automata has extended Hivenet with Kubeflow, ushering in a new era of ML Ops at scale.

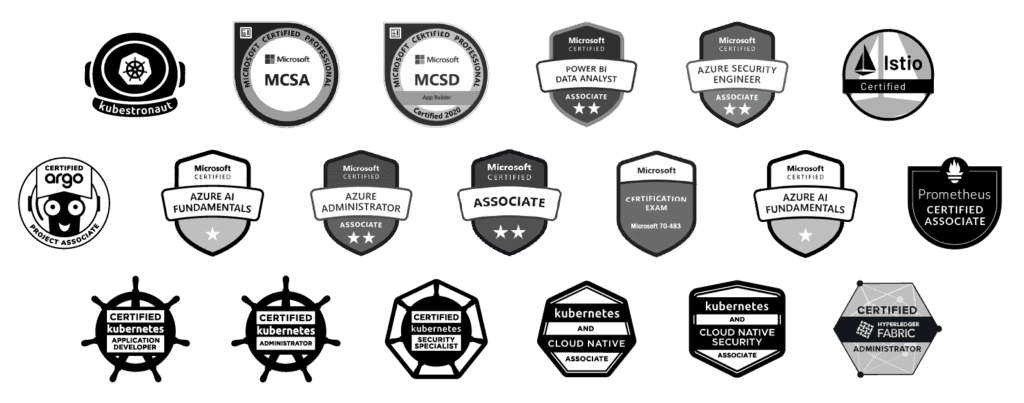

We combine the power of Kubeflow, Rancher Fleet, FluxCD, and GitOps strategies within Hivenet to open the door to unparalleled control over configurations, data distribution, and the management of remote devices.

What does that mean?

Hivenet helps you manage complex, distributed systems with high efficiency, reliability, and control.

Let’s break this down even more:

Kubeflow: Think of this as a smart assistant for working with machine learning (the technology that allows computers to learn and make decisions on their own) at large scale. Kubeflow helps organize and run these learning tasks more smoothly on your Kubernetes clusters.

Rancher Fleet: This tool is like a manager for overseeing many groups of computers (clusters) at once. No matter how many locations you have, Rancher Fleet helps keep everything running smoothly and in sync.

FluxCD: Imagine you have a master plan or blueprint for how you want your computer network to operate. FluxCD continuously checks that your actual systems match this blueprint and automatically applies updates whenever the plan changes.

GitOps strategies: This is a modern way of managing changes and updates to your network. By treating your plans and configurations like code that must be reviewed and approved before it is applied, you ensure that only vetted changes reach your systems, keeping everything secure and running smoothly.
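To make the FluxCD and GitOps descriptions more concrete, here is a minimal Python sketch of a single reconciliation step: compare the declared (desired) state with what is actually running, then create, update, or delete resources until the two match. It is a conceptual illustration under simplifying assumptions, not FluxCD's implementation: state is modeled as plain dictionaries, and the functions `plan` and `reconcile` and all resource names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical model: resource name -> spec. Real FluxCD works with
# Kubernetes manifests pulled from a Git repository.
State = Dict[str, dict]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """List the (action, resource) pairs that would make `actual` match `desired`."""
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            # In this sketch, anything no longer declared in Git is pruned
            actions.append(("delete", name))
    return actions


def reconcile(desired: State, actual: State) -> State:
    """Apply the plan and return the new actual state (the inputs are not mutated)."""
    new_state = dict(actual)
    for action, name in plan(desired, actual):
        if action == "delete":
            del new_state[name]
        else:
            new_state[name] = dict(desired[name])
    return new_state


if __name__ == "__main__":
    desired = {"model-server": {"replicas": 3}, "feature-store": {"replicas": 1}}
    actual = {"model-server": {"replicas": 2}, "old-job": {"replicas": 1}}
    print(plan(desired, actual))
    # [('create', 'feature-store'), ('update', 'model-server'), ('delete', 'old-job')]
    print(reconcile(desired, actual) == desired)  # True
```

A real controller repeats this comparison on a schedule (FluxCD re-checks on a configured interval), so drift introduced by hand is corrected automatically rather than left in place.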

For someone making big decisions, integrating these technologies with Hivenet offers the capabilities outlined in the sections that follow.

Embracing Kubeflow for ML Ops at Scale

Kubeflow, the open-source machine learning (ML) toolkit for Kubernetes, becomes a part of Hivenet, transforming it into a powerhouse for ML Ops at scale. This integration makes it possible to deploy, manage, and scale ML workflows with ease. Whether you are a developer or a system operator, Kubeflow within Hivenet simplifies much of the complexity of running machine learning at scale.
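Kubeflow Pipelines represents an ML workflow as a directed acyclic graph (DAG) of steps. The sketch below uses only Python's standard `graphlib` module (Python 3.9+), not the Kubeflow Pipelines SDK, to show how such a graph decides which steps must wait and which can run side by side. The pipeline, its step names, and the `execution_waves` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each step maps to the set of steps it depends on
PIPELINE: Dict[str, Set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "featurize": {"validate"},
    "train_baseline": {"featurize"},
    "train_candidate": {"featurize"},
    "evaluate": {"train_baseline", "train_candidate"},
    "deploy": {"evaluate"},
}


def execution_waves(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group steps into waves: every step in a wave has all of its dependencies
    satisfied by earlier waves, so steps within one wave could run in parallel.
    Raises graphlib.CycleError if the graph contains a cycle."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(number, wave)
```

The two hypothetical training steps land in the same wave, which is the kind of parallelism an orchestrator can exploit when scaling ML workflows across a cluster.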

A GitOps Strategy for the Future

GitOps, an operational model for Kubernetes and other cloud-native environments, becomes the cornerstone of Hivenet’s extended capabilities. By declaring the desired state of the system in Git repositories that serve as the source of truth, GitOps offers a high level of transparency and reproducibility: every change is recorded as a commit that can be reviewed, audited, and reverted. Hivenet, now a GitOps-driven platform, provides a strategy to control and shape your future in the swiftly advancing tech landscape.
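The reproducibility and rollback properties of Git as a source of truth can be modeled in a few lines. In this hypothetical sketch, each commit stores a complete declared state, and its ID is a SHA-256 hash of the parent ID, message, and state, so identical history always produces identical IDs. `ConfigRepo`, `Commit`, and their methods are invented for illustration; this is not Git's object model or any GitOps tool's API.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional

State = Dict[str, dict]


@dataclass
class Commit:
    commit_id: str
    parent: Optional[str]
    message: str
    state: State


@dataclass
class ConfigRepo:
    """Hypothetical append-only history of declared states (not Git's object model)."""
    history: List[Commit] = field(default_factory=list)

    def commit(self, state: State, message: str) -> str:
        parent = self.history[-1].commit_id if self.history else None
        # Same parent + message + state always yields the same ID (reproducible)
        payload = json.dumps(
            {"parent": parent, "message": message, "state": state}, sort_keys=True
        )
        commit_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
        # Deep-copy so later edits to `state` cannot silently rewrite history
        self.history.append(Commit(commit_id, parent, message, copy.deepcopy(state)))
        return commit_id

    def head(self) -> State:
        """The current desired state a GitOps controller would reconcile toward."""
        return copy.deepcopy(self.history[-1].state)

    def revert_to(self, commit_id: str) -> str:
        """Roll back by recording a new commit whose state equals an earlier one."""
        for past in self.history:
            if past.commit_id == commit_id:
                return self.commit(past.state, f"Revert to {commit_id}")
        raise KeyError(commit_id)


if __name__ == "__main__":
    repo = ConfigRepo()
    v1 = repo.commit({"model-server": {"image": "model:1.0"}}, "Deploy model 1.0")
    repo.commit({"model-server": {"image": "model:1.1"}}, "Deploy model 1.1")
    repo.revert_to(v1)
    print(repo.head())  # {'model-server': {'image': 'model:1.0'}}
    for entry in repo.history:
        print(entry.commit_id, entry.message)
```

Note that the rollback is itself recorded as a new commit rather than erasing history, which is what keeps the audit trail intact.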

Hivenet's Foundation: Open Source, K3s Kubernetes, and More

The foundation of Hivenet remains unwavering in its commitment to openness, utilizing K3s Kubernetes to create pre-integrated cloud and edge clusters on the same control plane. Deployed to both cloud and edge, Hivenet’s foundation is cloud provider-agnostic, offering connected services that span Hyperledger Fabric blockchain for chain-of-custody and transaction management, state management for workflow efficiency, IoT device integration through ROS, and the ability to manage and control connected services remotely.
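Rancher Fleet, introduced earlier, decides which clusters receive a given set of configurations by matching cluster labels against selectors. The sketch below shows that idea for a mix of cloud and edge clusters on one control plane. It is a simplified, hypothetical model in plain Python, not Fleet's API: the cluster names, labels, and the `matches` and `select_targets` helpers are invented, and only equality matching (similar to a Kubernetes `matchLabels` selector) is modeled.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    labels: Dict[str, str]


def matches(labels: Dict[str, str], selector: Dict[str, str]) -> bool:
    """Equality-only matching, like a Kubernetes `matchLabels` selector:
    every key/value pair in the selector must be present on the cluster.
    An empty selector therefore matches every cluster."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_targets(clusters: List[Cluster], selector: Dict[str, str]) -> List[str]:
    """Names of the clusters a bundle with this selector would be deployed to."""
    return [c.name for c in clusters if matches(c.labels, selector)]


# Hypothetical cloud and edge clusters registered on one control plane
CLUSTERS = [
    Cluster("cloud-us-east", {"tier": "cloud", "region": "us-east"}),
    Cluster("edge-plant-01", {"tier": "edge", "region": "us-east"}),
    Cluster("edge-plant-02", {"tier": "edge", "region": "eu-west"}),
]

if __name__ == "__main__":
    print(select_targets(CLUSTERS, {"tier": "edge"}))
    # ['edge-plant-01', 'edge-plant-02']
    print(select_targets(CLUSTERS, {"tier": "edge", "region": "us-east"}))
    # ['edge-plant-01']
```

Targeting by labels rather than by cluster name means a newly registered edge site with the right labels picks up its configuration without anyone editing the deployment definition.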

The extension of Hivenet with Kubeflow for ML Ops at scale is not just a step forward; it’s a leap into a future where control, efficiency, and innovation converge. This amalgamation of technologies within Hivenet sets the stage for a new era in platform capabilities, empowering users to shape and control their tech landscape with precision and ease.